Beta Solutions Blog

Artificial Intelligence - A Closer Look

Date: Feb 28, 2019

Author: Morten Kirs

Introduction

Believe it or not, the concept of artificial intelligence (AI) has been around for quite a while. The first digital computer was developed in the 1940s, and with medicine at the time having discovered that the brain is effectively an electrical network of neurons, it wasn't long until research into developing an electronic brain began. Perhaps because they did not appreciate the full complexity of the problem, the original researchers sparked a bit too much hype and tended to over-promise on deliverables. This led to periods of funding cuts and waning interest, known as "AI winters", followed by periods of resurgence whenever new developments arose. Recent advances in "deep" neural networks, and in training them through "big data" and machine learning techniques, have sparked a new hype cycle. This time it appears that AI could be here to stay, as it seems to have proved that it can solve problems that humans have yet to solve.

What is AI and Where is it Used?

There is still a big difference between the application-specific AI that is in the limelight today and general AI, or the electronic brain, that has been theorised since the late 1950s. More than 60 years on, we're still not expecting robots to stage a hostile takeover of the world anytime soon. Application-specific AI, on the other hand, is now everywhere. Whenever you post a picture on Facebook, complex image recognition AI will automatically find and tag you and your friends. Whenever you ask Siri or Alexa a question, speech recognition AI will translate your voice into appropriate commands. And eventually, when you jump into a car, AI will be able to take you to your destination without you ever touching the wheel.

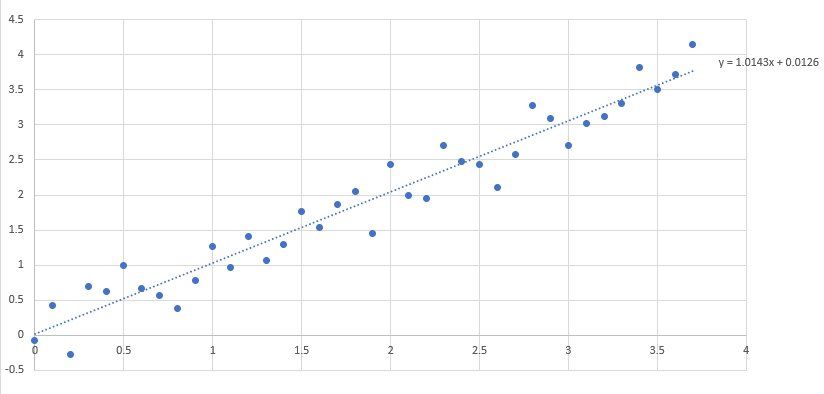

Despite its apparent complexity, at the heart of modern AI lies a simpler optimisation problem: curve fitting. If you've taken high school math or statistics, you will likely have encountered a similar problem on a 2D graph. Given a bunch of points (x,y), what is the line of best fit that describes the data set? Once you've found the line of best fit, you can then predict the output y, given any x value.

Figure 1. Simple line fit to known sample data. At the heart of AI lies this same data-fitting principle.
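As a rough illustration of the line-of-best-fit idea, here is a minimal sketch using ordinary least squares in plain Python. The function names (`fit_line`, `predict`) and the sample points are made up for this example and are not taken from any particular library.

```python
def fit_line(points):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    # Covariance of x and y, and variance of x (both left unnormalised).
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Use the fitted line to estimate y for a new x value."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical sample data: y is roughly 2 * x with a little noise.
samples = [(1, 2.1), (2, 3.9), (3, 6.2), (4, 7.8), (5, 10.1)]
model = fit_line(samples)
print(f"prediction for x = 6: {predict(model, 6):.2f}")
```

The "training" here is a single closed-form calculation. With more inputs and coefficients there is usually no such shortcut, and the fit has to be found iteratively.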

AI solves a problem much like this, except with a fair bit more calculation than you would have used in high school. AI is tuned with potentially millions of data points, where each point could have many inputs and many outputs. For example, an input may be an image with each pixel effectively being an input. An output from this could be a simple yes/no decision for a question such as "Is there a person in this image?", or it could be multiple outputs for coordinates for each person found in the image. AI finds a solution model that best fits the original data set, with the model potentially using thousands of coefficients. AI can then use this model to predict the result of any future inputs. The output of a model is usually a probability rather than a direct yes or no answer.
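To make the point about probability outputs concrete, here is a minimal sketch of a yes/no model with just two coefficients, fitted by gradient descent. It uses logistic regression, chosen here only because it is one of the simplest models of this kind. The function names, the learning rate and the sample data are all assumptions made for illustration.

```python
import math


def sigmoid(z):
    """Squash any number into the range 0..1 so it can be read as a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def train(samples, steps=5000, rate=0.1):
    """Fit the coefficients w and b by repeatedly nudging them to reduce the error."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, label in samples:
            error = sigmoid(w * x + b) - label  # how far off the prediction is
            grad_w += error * x
            grad_b += error
        w -= rate * grad_w / len(samples)
        b -= rate * grad_b / len(samples)
    return w, b


# Hypothetical labelled data: (input value, 1 for "yes" / 0 for "no").
samples = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
           (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]
w, b = train(samples)

p_low = sigmoid(w * 1.0 + b)   # probability of "yes" for a small input
p_high = sigmoid(w * 4.0 + b)  # probability of "yes" for a large input
print(f"P(yes | x=1.0) = {p_low:.3f}, P(yes | x=4.0) = {p_high:.3f}")
```

A real image model works on the same principle, but with one input per pixel and vastly more coefficients. Turning the probability into a yes/no answer is then a matter of picking a threshold, such as 0.5.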

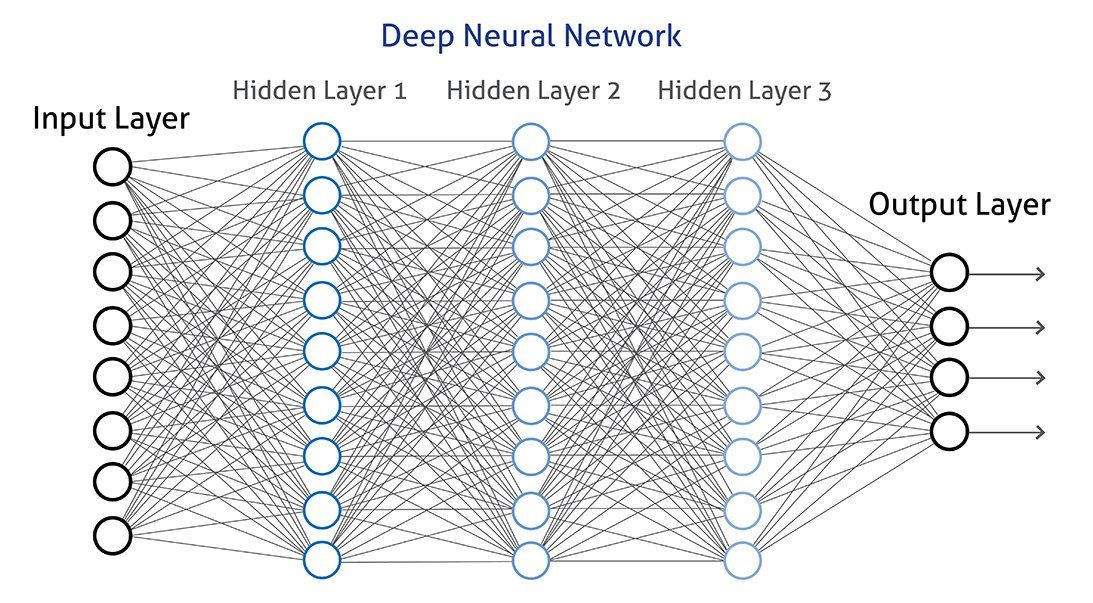

Modern advances in "deep learning" have shifted complex problems into neural networks. Each node has its own function and solution, and the output of one node is then fed into further nodes. Splitting the problem into nodes allows it to be optimised in parallel using modern GPUs or cloud-based server farms. There are currently many architectures available for deep learning, an example of which is pictured below.

Figure 2. Example neural network architecture. In between the inputs and outputs lie multiple hidden layers.
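The idea of node outputs feeding into further nodes can be sketched in a few lines of Python. This is only a forward pass through a tiny, fully connected network. The weights and biases are arbitrary hand-picked numbers (an assumption for illustration), whereas in a real system they would be the result of training.

```python
import math


def sigmoid(z):
    """Activation function applied at every node."""
    return 1.0 / (1.0 + math.exp(-z))


def layer(inputs, weights, biases):
    """Each node takes a weighted sum of all inputs, adds its bias, then activates."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def forward(inputs, network):
    """Feed the outputs of one layer into the next, from inputs to final output."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical network: 3 inputs -> 2 hidden nodes -> 2 hidden nodes -> 1 output.
network = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -0.2]),
    ([[1.2, -0.7]], [0.05]),
]
output = forward([0.9, 0.1, 0.4], network)
print(output)
```

Every node within a layer is computed independently of its neighbours, which is what makes this kind of calculation a good fit for parallel hardware such as GPUs.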

Training AI and Machine Learning

Since the early days of AI, board games have been a simple but effective way of determining AI "intelligence". One of the first AIs in the early 60s was, in fact, a checkers program that managed to beat experienced amateurs. Today AI is pretty much unbeatable in games like this and is able to teach itself games at astonishing rates. An example of this is Google's AlphaZero chess engine, which trained itself to play chess in under 4 hours to a superhuman level by simply playing itself over and over[1]. Given more time, a 9-hour trained engine was able to defeat the most notable engine of the time, Stockfish 8.

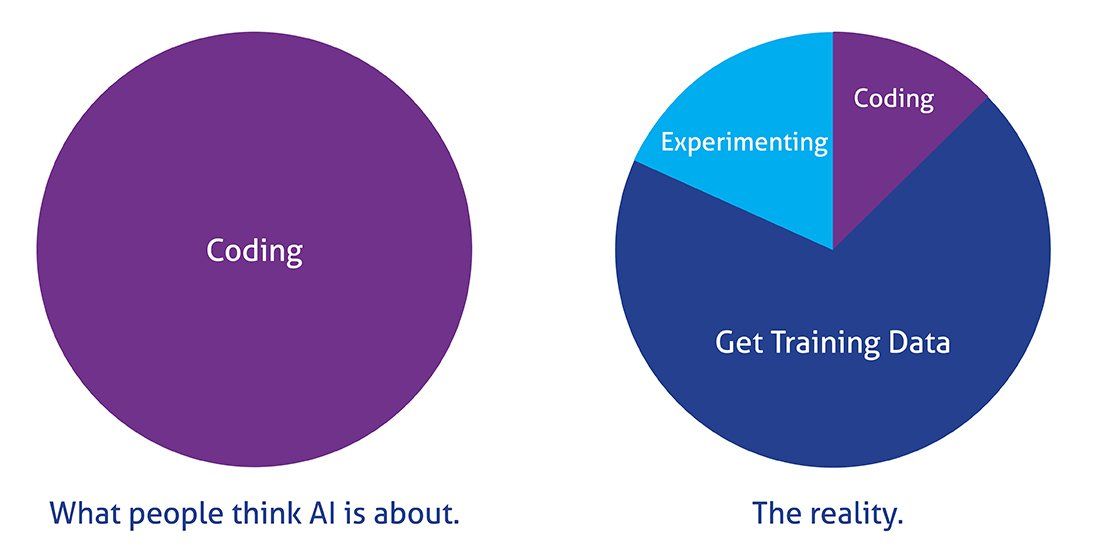

Training and machine learning are at the heart of AI. AI needs a lot of previously categorised data to learn from. For board games this is relatively simple, as the engine can effectively play itself and learn along the way. There is no consequence to losing a board game. Clearly this is not the correct approach for all scenarios. In a fully autonomous vehicle mistakes are critical, and needless to say a trial-and-error approach to learning to drive could be deadly. An autonomous vehicle AI instead learns by observing a human driver. For an AI to be effective at driving it requires thousands of hours of observed inputs.

Extensive training is the reason why AI might not be applicable to all problems. Facebook and Google have access to millions of images, data points, etc. to train their AI algorithms. Obtaining thousands of data points and categorising these for your needs is very difficult and time consuming for a smaller company that's just starting out. However, as long as the function is non-critical, an AI system may be able to train itself over time, given a pre-built model of similar data.

Figure 3. A realistic picture on what AI is all about. A large part of AI "coding" lies in getting and categorising sufficient training data.

The Magic of AI

There are by now several examples of AI being used in medicine to predict patients' current and future ailments. A prominent example of this is Deep Patient, an AI which has learned to predict conditions like liver cancer and is also strangely good at diagnosing complex disorders like schizophrenia. It seems to do this far better than most doctors can. How does it do this though? As of today, the answer is that no one knows. Deep Patient, much like most AIs, is a complex network of neural pathways that have been tuned through the use of a huge data set. The output is the result of optimised math that makes no sense to someone looking at the final system, which makes it a prime example of one of the issues with AI. Although an AI has found a solution to the problem, we currently have no concrete way of verifying the end result's reliability, nor of detecting any prejudices in the system due to biased data.

Google's Ali Rahimi, an award winner at the 2017 Conference on Neural Information Processing Systems (NIPS)[4], likened the current state of AI to the medieval magic of alchemy. Some things just seem to work. In medieval times these examples were metallurgy and glass-making. The processes around these examples worked like a charm when using the scientific knowledge of alchemy at the time, whereas other things like turning common metals into gold simply didn't. AI is much the same at the moment, where some architectures, processes and optimisations seem to work for specific problems, whereas other attempts at solving problems through AI do not. There is currently no full understanding of the processes that underlie machine learning. For alchemy, we now understand that magic is not the right explanation, but we still need to fully figure out the mysteries of AI.

An interesting attempt at understanding the inner workings of AI has been made by Google in its Deep Dream project[2], which I'd encourage the reader to check out. It is a tool for finding things like faces in images, but can also be run in reverse to see what an optimised and biased AI might be looking for. The result is strange images that are now classified as a new art form.

Figure 4. Example image from Google's Deep Dream project. An example of what AI may see when it tries to look for patterns.

Problems with AI

Deep learning and AI do have their downsides, and there are a few key reasons for this, which have been outlined by Gary Marcus, CEO of Geometric Intelligence (an AI company now acquired by Uber)[3]:

- AI is greedy

It requires huge sets of training data.

- AI is brittle

If the AI is transferred and confronted with scenarios which differ from training data, the AI is very likely to break.

- AI is opaque

AI is effectively a "black box" whose outputs cannot be explained. Parameters of neural networks exist in mathematical geography and are not debuggable like traditional programs.

- AI is shallow

AI does not have any innate knowledge and holds no common sense checks about the world.

There is current research into "Artificial General Intelligence" which might make AI less shallow and less brittle by providing a basic model of the world, but such a feat seems incredibly complex. Research is also continuously trying to fully understand how best to optimise and architect AI. If we knew exactly what data needs to be fed to AI, this would likely make it less opaque and less greedy. However, for the moment it seems we are a little way off from any of these goals. We could experience another AI winter before these problems are solved.

There are also a few social arguments against AI. An extreme example of this is an AI that was used in prison systems[5]. The goal of the AI was to try and predict which prisoners were likely to re-offend once they were released. As people of certain races are already over-represented in prison populations, it appeared that the AI ended up having a heavy racial prejudice itself. It seems as though prejudices held by humans have also been learned by this AI. This general human bias likely extends to all forms of AI, such as that which determines your search results and the advertisements you see online. As the AI has been trained by a multitude of previous users, there is likely to be an inherent bias in this data towards a general worldview. The full extent of the bias, as argued above, is completely unknown as the AI behaves as a black box. There is concern in the community that AI might be breeding intolerance, may be influencing elections, and could be damaging individual and original thought in general.

Embedded AI

AI has ingrained itself in the embedded world. Currently the common approach is to send data from a simple IoT device to the cloud, where the more complex and involved AI and machine learning algorithms run. This is because AI algorithms often require a lot of computational power. Once trained on more powerful computers, the AI can be transferred onto more local specialised hardware. IC developers are currently working on integrating AI accelerators into the silicon of microcontrollers, which should eventually allow for faster processing and better allow these algorithms to run and train locally.

Conclusions

AI is now everywhere, and it has proved itself to be a powerful tool that has helped us solve, or given insight into, a variety of problems. The black box nature of AI and our lack of thorough understanding of it mean that critics think of it as unreliable and dangerous (Elon Musk has stated it's more dangerous than nukes[6]). Nevertheless, AI seems to have found its niche in the market for non-critical applications where it is able to find patterns and make predictions far better than most humans.

If you have a problem which needs pattern recognition then it may be worth considering incorporating AI into your next project. Contact us to talk to someone in our knowledgeable team.

References

- AlphaZero Engine

https://en.wikipedia.org/wiki/AlphaZero

- Google's Deep Dream Generator:

https://deepdreamgenerator.com/

- Greedy, Brittle, Opaque, and Shallow: The Downsides to Deep Learning - Jason Pontin:

https://www.wired.com/story/greedy-brittle-opaque-and-shallow-the-downsides-to-deep-learning/

- Ali Rahimi's talk at NIPS (NIPS 2017 Test-of-time award presentation):

https://www.youtube.com/watch?v=Qi1Yry33TQE

- MIT Technology Review: AI is sending people to jail—and getting it wrong - Karen Hao:

https://www.technologyreview.com/s/612775/algorithms-criminal-justice-ai/

- CNBC: Elon Musk: ‘Mark my words — A.I. is far more dangerous than nukes’ - Catherine Clifford:

https://www.cnbc.com/2018/03/13/elon-musk-at-sxsw-a-i-is-more-dangerous-than-nuclear-weapons.html

- Figure 3 retrieved from Common mistake: AI is all about building neural nets, https://hackernoon.com/%EF%B8%8F-big-challenge-in-deep-learning-training-data-31a88b97b282

- Machine Learning & Artificial Intelligence image via www.vpnsrus.com

- Banner image retrieved from https://pixabay.com/photos/artificial-intelligence-robot-ai-ki-2167835/