Artificial Neural Networks on the Edge

Date: September 24, 2020

Introduction

As Artificial Intelligence (AI) becomes more prevalent, we at Beta Solutions are increasingly investigating how these technological advances can unlock new and unique features for our clients' projects.

This article gives an overview of Artificial Intelligence and explores its fundamental building block: the Neural Network. We then look at whether Neural Networks are suited to operating on the Edge, and what needs to be considered when doing so.

Don’t know what the ‘Edge’ or a ‘Neural Network’ is? Then read on …

Background of Neural Networks

You have probably heard the terms Artificial Neural Network (ANN), Convolutional Neural Network (CNN), Artificial Intelligence and Machine Learning. These terms are closely related and often used interchangeably. Machine Learning is a branch of AI, ANNs are one Machine Learning technique, and CNNs are a particular type of ANN. All of them rest on the idea of training a computer algorithm to learn in a way loosely similar to how your brain learns. Artificial Neural Networks are now beginning to be used everywhere. They recognise the words you say (e.g. “Hey Siri”), they help drive Tesla's driverless cars, they decide which ads to show you, and they power some quite Orwellian types of camera surveillance in use in some countries. You may be forgiven for thinking that neural nets are a fairly recent development. In fact, research in neural nets goes back to the late 1940s[1]. The term ‘Artificial Intelligence’ (AI) was coined by John McCarthy, who in 1959 co-founded MIT’s Artificial Intelligence Lab[2].

AI research has gone through a number of periods of boom and bust over the years. One reason AI saw limited use in the past was the limited processing power available. AI networks require a huge amount of processing power to train, and a moderate amount of computing power to operate. Large cloud farms of parallel computers and arrays of GPUs are now readily available, so practical large AI networks can be trained in a relatively short time. On top of this, there have been some amazing advances in ANN training algorithms. Mobile phones also have ever more processing power, which makes it relatively simple for them to run smaller neural networks for tasks such as voice processing. AI was once a buzzword for researchers and science fiction for writers alike. Now it is just another tool on the belt for engineers and programmers. They use it to solve classification problems such as speech recognition, image analysis, and other problems where the data can be labelled.

However, there are some caveats to what neural networks can be used for. I enjoy a good science fiction book as much as anyone, such as Isaac Asimov’s 1950s Robot series, where robots with ‘General AI’ can teach themselves and apply their processing to many different problems. In reality, we are still very much in the ‘Narrow AI’ era, where AI can solve only very specific, ‘narrow’ problems such as image identification or speech recognition. ‘Narrow AI’ needs a large amount of training data to classify your problem, and your solution is only ever as good as your data.

How does an Artificial Neural Network work?

An Artificial Neural Network takes inspiration from how our brains work. A child sees a cat and her Dad tells her it is a cat, which teaches the child that this ‘is a cat’. The child then sees a dog and her Mum tells her it is a dog, which teaches the child that it is ‘not a cat’. See enough cats and the child becomes quite proficient at identifying cats and ‘not cats’!

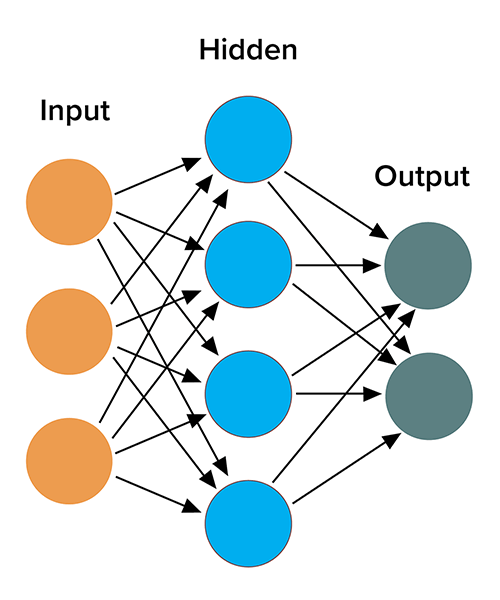

A simplified Neural Network is shown in the diagram below. Input data (such as camera data) is fed into a number of hidden layers of weighted nodes, and there can be many nodes and layers. After considerable maths (mostly multiplication and addition), the network outputs a value that can be used to identify the object of interest.

Figure 1: Artificial Neural Network [3]

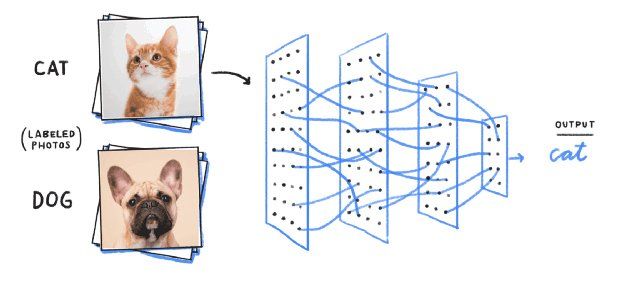
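The ‘multiplication and addition’ inside a network can be sketched in a few lines of plain Python. This is a minimal illustration, not how a real framework implements it. The layer sizes (3 inputs, 2 hidden nodes, 1 output), the hand-picked weights and the choice of ReLU and sigmoid activation functions are all assumptions made for the example.

```python
import math


def relu(x: float) -> float:
    """Hidden-layer activation: pass positive values, clip negatives to 0."""
    return max(0.0, x)


def sigmoid(x: float) -> float:
    """Output activation: squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases, activation):
    """Each node multiplies every input by its own weight, adds the results
    (plus a bias), then applies the activation function."""
    return [
        activation(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]


def forward(inputs, layers):
    """Feed the data through each (weights, biases, activation) layer in turn."""
    values = inputs
    for weights, biases, activation in layers:
        values = dense_layer(values, weights, biases, activation)
    return values


# HYPOTHETICAL weights for a 3-input -> 2-hidden-node -> 1-output network.
# In a real ANN these values come from training, not from a person.
layers = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1], relu),
    ([[1.2, -0.7]], [-0.3], sigmoid),
]

score = forward([1.0, 0.5, 0.2], layers)[0]
print(f"output score: {score:.3f}")
```

Every node does the same cheap operation. A network becomes expensive to run only because there are very many nodes and layers, and that cost is what matters later when running on the Edge.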

The Convolutional Neural Network (CNN)[19] should also be considered. CNNs are typically used for image or speech processing. They allow for spatial recognition, such as where one's eyes are in relation to one's nose, which can help significantly with image recognition.

Figure 2: Convolutional Neural Network[21]
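The ‘spatial’ ability of a CNN comes from the convolution operation. A small grid of weights (a kernel) is slid across the image, so each output value depends only on a local neighbourhood of pixels. The following is a minimal pure-Python sketch. The tiny image and the hand-written edge-detecting kernel are assumptions chosen for illustration.

```python
def convolve2d(image, kernel):
    """Slide `kernel` across `image` ('valid' mode: no padding) and return the
    feature map of weighted sums. As in most CNN libraries, the kernel is not
    flipped, so strictly this is a cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    feature_map = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            # Each output only "sees" a small kh x kw neighbourhood of pixels.
            total = 0.0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# A tiny 5x5 greyscale "image": dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]

# HYPOTHETICAL hand-written vertical-edge detector. In a real CNN the kernel
# weights are learned during training.
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]

for row in convolve2d(image, kernel):
    print(row)
```

The large values in the first two output columns mark where the dark-to-bright edge sits. A CNN stacks many such learned kernels in layers. Later layers can then respond to larger arrangements, such as an eye above a nose.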

The basic, simplified steps to build and use a neural network are:

- Capture data,

- Label data,

- Train NN,

- Run NN.

Suppose you wanted an ANN that could identify cats. You would need to capture a large number of images of cats that you, as a human, have labelled as cats, and an equally large number of images that you know are not cats. The ANN is then trained by an optimising algorithm, which takes a lot of processing power. The algorithm adjusts the weights of the hidden nodes until the output gives a high number if ‘it is a cat’ and a low number if it is ‘not a cat’. Now you can run the ANN. When it is presented with a camera image of a cat, it will give a probability that the image is a cat. But remember: the results are only as good as your data!

NB: There is a lot more that could be said here, but this is the basic concept.
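The capture, label, train and run steps can be sketched in plain Python. To keep it short, this trains a single artificial neuron (equivalent to logistic regression) rather than a network with hidden layers. It uses gradient descent as the optimising algorithm. The two input features, their value ranges and the training settings are all hypothetical. Real image classifiers work on thousands of pixel values and are trained with a framework such as TensorFlow.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Squash the weighted sum into a probability-like score in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Run the model: weighted sum of the inputs, then sigmoid."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(samples, labels, epochs=500, learning_rate=0.5):
    """Gradient descent on the log-loss: nudge each weight so the output
    moves towards 1 for 'cat' (label 1) and towards 0 for 'not a cat' (label 0)."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            error = predict(weights, bias, x) - y  # d(log-loss)/d(weighted sum)
            weights = [w - learning_rate * error * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * error
    return weights, bias


# 1. Capture and 2. label the data. HYPOTHETICAL two-number "images"
# (say, ear pointiness and whisker prominence, each scaled to 0..1).
random.seed(0)
cats = [[random.uniform(0.6, 1.0), random.uniform(0.6, 1.0)] for _ in range(20)]
not_cats = [[random.uniform(0.0, 0.4), random.uniform(0.0, 0.4)] for _ in range(20)]
samples = cats + not_cats
labels = [1] * len(cats) + [0] * len(not_cats)

# 3. Train.
weights, bias = train(samples, labels)

# 4. Run on a new, unseen example.
print(f"P(cat) = {predict(weights, bias, [0.9, 0.8]):.2f}")
```

The made-up ‘cat’ and ‘not cat’ examples are cleanly separated, so the trained neuron should give a high score to the new cat-like input. With messier real-world data the scores are less clear-cut, which is why the quality of the labelled data matters so much.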

One main disadvantage of AI compared with traditional algorithmic methods is that it is very much a black-box design. You never quite know what is going on inside the model:

- Data in → ‘Magic’ multiplication and addition → Data out!

Also note that an ANN will never be 100% correct; there will always be statistical outliers. We humans get things wrong too. However, we have the advantage of being able to problem-solve, or to recognise when we do not know what something is. In most cases, however, good enough is good enough.

Artificial Neural Network Tools

There are now a large number of tools that can be used to train networks. At the highest level are cloud-based image classifiers with high-level web APIs that can be controlled from Python scripts. Examples include Google Cloud AutoML[11], Microsoft Azure Computer Vision[12] and Amazon Rekognition[13]. You upload images, sort them into labelled folders, then call a simple web API to find out what is in your picture. These tools typically use a Convolutional Neural Net (CNN) and various other magic sauces under the hood. They are relatively easy to use, and little background knowledge of computer vision or CNNs is required. However, these solutions tie you to that specific provider. They are also usually not compatible with portable / “on the Edge” devices.

The next step up in complexity and flexibility is to train your own ANN. The main tools for this are open source, notably Google's TensorFlow[14] and Berkeley’s Caffe[15]. TensorFlow, with its Python interface, is probably the easiest of these to get up and running. There are also a large number of tutorials and example use cases on the web[16].

To improve speed and reduce memory requirements on embedded devices, CNN models are usually converted to a TensorFlow Lite model. The conversion typically also quantizes the model, meaning the weights are converted from 32-bit floating point to 8-bit integers. The network can then run mostly on 8-bit integer arithmetic, which enables it to operate on much smaller embedded processors. Quantization does reduce the accuracy of a model, by roughly 3% depending on the model. However, the benefits are considerable, specifically the large memory savings (~4x) and significant energy savings[7][8]. Pruning, which removes weights that have little effect on the output, can sometimes also be applied.
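Here is a minimal plain-Python sketch of what 8-bit quantization does to a list of weights. It uses a simple asymmetric (scale and zero-point) scheme, similar in spirit to the one TensorFlow Lite describes. It is not TensorFlow Lite's converter code. The weight values and the pruning threshold are hypothetical. A simple magnitude-based pruning function is included for comparison.

```python
def quantize_int8(weights):
    """Affine quantization: real_value ~= scale * (q - zero_point), q in [-128, 127]."""
    w_min = min(min(weights), 0.0)  # the range must include 0.0 exactly
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / 255.0 or 1.0  # guard against an all-zero list
    zero_point = round(-128 - w_min / scale)
    q = [max(-128, min(127, round(w / scale) + zero_point)) for w in weights]
    return q, scale, zero_point


def dequantize(q, scale, zero_point):
    """Map the 8-bit integers back to approximate float values."""
    return [scale * (qi - zero_point) for qi in q]


def prune(weights, threshold):
    """Magnitude pruning: zero out weights too small to matter much."""
    return [0.0 if abs(w) < threshold else w for w in weights]


weights = [0.82, -1.37, 0.004, 0.25, -0.61, 1.9, -0.02, 0.47]  # HYPOTHETICAL float32 weights
q, scale, zp = quantize_int8(weights)
restored = dequantize(q, scale, zp)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print("int8 values:", q)
print(f"max round-trip error: {max_error:.4f} (scale / 2 = {scale / 2:.4f})")
print(f"storage: {4 * len(weights)} bytes as float32 vs {len(q)} bytes as int8")
print("pruned:", prune(weights, threshold=0.05))
```

Each weight now needs one byte instead of four, and the round-trip error per weight is at most half the quantization step (`scale / 2`). Whether that small error costs noticeable accuracy depends on the model.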

Artificial Neural Network Training

Training an ANN can take an immense amount of cloud processing time. It also requires a huge number of labelled images, which take time to classify. One way to reduce the cloud computing time is to take an already trained CNN and re-train it on a new set of specific images. This is called “transfer learning”.

There are two methods of transfer learning.

- The first method involves fine-tuning the pre-trained model. This can be susceptible to over-fitting (i.e. performing well on the training images but not on new ones), and it still takes time and a large number of additional training images.

- The second method involves “feature transfer”. The pre-trained CNN is exposed to the training set for the specialised task, and information is extracted from its intermediate layers. This captures low- to high-level image features (such as the number of legs on an insect). The feature information is then used to train a simpler machine learning system, such as a support vector machine (SVM), on the more specialised task. Feature transfer in combination with SVMs can work better than fine-tuning when the specialised task is different from the original task. It is computationally more efficient and works with smaller image sets. SVMs are also less susceptible to over-fitting when working with imbalanced data sets, that is, data sets where some categories are represented by very few examples.
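The feature-transfer idea can be sketched in plain Python. The pre-trained network is frozen and used only to turn each image into a feature vector, and a small linear SVM is then trained on those vectors. This is a toy sketch built on some loud assumptions:

- `frozen_feature_extractor` is a hypothetical stand-in (a fixed projection plus ReLU) for the intermediate layers of a real pre-trained CNN.
- Each ‘image’ is reduced to two numbers.
- The SVM is trained with plain sub-gradient descent on the hinge loss, rather than with a library such as scikit-learn.

```python
def frozen_feature_extractor(image):
    """HYPOTHETICAL stand-in for a pre-trained CNN's intermediate layer:
    fixed weights that are never updated, followed by a ReLU."""
    frozen_weights = [[1.0, 0.2], [-1.0, 0.2], [0.5, 1.0]]
    return [max(0.0, sum(w * p for w, p in zip(row, image))) for row in frozen_weights]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def train_linear_svm(features, labels, lam=0.01, learning_rate=0.05, epochs=100):
    """Linear SVM via sub-gradient descent on the L2-regularised hinge loss.
    Labels must be +1 or -1."""
    w, b = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            if y * (dot(w, x) + b) < 1:
                # Inside the margin or misclassified: the hinge loss is active.
                w = [wi - learning_rate * (lam * wi - y * xi) for wi, xi in zip(w, x)]
                b += learning_rate * y
            else:
                # Correct with a safe margin: only the regularisation term applies.
                w = [wi - learning_rate * lam * wi for wi in w]
    return w, b


def classify(w, b, image):
    return 1 if dot(w, frozen_feature_extractor(image)) + b >= 0 else -1


# A small, HYPOTHETICAL specialised data set.
images = [(2.0, 0.0), (2.5, 0.5), (3.0, 0.2), (2.2, -0.3),
          (-2.0, 0.0), (-2.5, 0.4), (-3.0, -0.2), (-2.1, 0.3)]
labels = [1, 1, 1, 1, -1, -1, -1, -1]

# Step 1: pass the training set through the frozen network to get features.
features = [frozen_feature_extractor(img) for img in images]
# Step 2: train only the small SVM on those features.
w, b = train_linear_svm(features, labels)

print([classify(w, b, img) for img in images])
print(classify(w, b, (2.8, 0.1)), classify(w, b, (-2.7, 0.0)))
```

Only `w` and `b` are learned, and the extractor's weights never change. This is why feature transfer needs far less computation and data than re-training the whole network.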

Most of the pre-trained networks used for feature transfer come from the ImageNet challenges. These were a series of competitions in which a large set of labelled images was made available, and teams competed to build the CNN that identified the images most accurately. These are quite big networks. The later winning networks achieved (top-5) error rates below 10%, on par with human-level performance[19]. The most common of these networks are:

- AlexNet

- GoogLeNet

- VGGNet

- ResNet

Embedded Processors

A look at the Edge:

‘The Edge’ is a term that basically means processing data on the embedded device, rather than in the cloud. This can have a number of advantages. For example, if you are running a cellular device with a camera, sending every image back to the cloud server will use considerable data, not to mention the cost in cloud computing time. If instead you can run an ANN algorithm on the embedded device (a.k.a. the ‘Edge’), only the data you are interested in needs to be transmitted to the cloud server. The downside is that the embedded device needs enough computational power to run the ANN. This matters most for video applications, where objects must be identified at a high frame rate.
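A back-of-the-envelope sketch shows the size of the data saving. Every number here is a hypothetical assumption, chosen only to show the shape of the calculation:

- one 100 KB frame per second,
- 50 frames of interest per day,
- 1 MB taken as 1000 KB.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def cloud_only_mb_per_day(frames_per_second: float, image_kb: float) -> float:
    """Every captured frame is uploaded for processing in the cloud."""
    return frames_per_second * SECONDS_PER_DAY * image_kb / 1000


def edge_mb_per_day(frames_of_interest_per_day: float, image_kb: float) -> float:
    """The ANN runs on the device; only the frames it flags are uploaded."""
    return frames_of_interest_per_day * image_kb / 1000


# HYPOTHETICAL figures, for illustration only.
cloud = cloud_only_mb_per_day(frames_per_second=1, image_kb=100)
edge = edge_mb_per_day(frames_of_interest_per_day=50, image_kb=100)
print(f"cloud-only: {cloud:,.0f} MB/day")
print(f"on the Edge: {edge:,.0f} MB/day ({cloud / edge:,.0f}x less data)")
```

Under these assumptions the camera would upload about 8,640 MB per day if every frame went to the cloud. It would upload only 5 MB per day if it sends just the frames its ANN flags.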

Can ANNs operate on the Edge?

The short answer is “YES”. Advances in embedded processing power, low-power processors and more efficient Neural Networks mean many ANNs can now be run on the Edge.

Of course, the longer answer is “IT DEPENDS”. There is a wide range of factors to consider, including restrictions on power, memory, processing power and overall price. All of these factors must be balanced when deciding whether or not to operate an ANN on the Edge.

For a more detailed and technical explanation of various development approaches available to the engineer - read on.

Embedded Systems on Chip (SoCs)

More and more embedded Systems on Chip (SoCs) and accelerators capable of ANN processing at the Edge are emerging[5]:

- Raspberry Pi

- Intel Movidius Neural Compute Stick (NCS)

- Intel Neural Compute Stick 2 (NCS2)

- Nvidia Jetson

- Coral USB Accelerator (a plug-in USB peripheral that could be used in conjunction with, say, a Raspberry Pi)

Figure 3: Running neural networks on embedded systems.[22]

Benchmarking has shown that these ANN accelerators can get pretty close to the performance of a high-performance PC[5]. However, these embedded devices still use a lot of power, so they are likely not suitable for battery-powered devices. They can also be relatively expensive, so they will only be suitable for some embedded applications.

The Raspberry Pi 4 is probably the most affordable and accessible way to get started with embedded machine learning right now. It can be used on its own with TensorFlow Lite for competitive performance, or with Google's Coral USB Accelerator for ‘best in class’ performance. However, its idle current draw is between 410 and 600mA, with a peak current of 860 to 1430mA at 5V. That means only a few hours of operation on a pair of AA batteries, and less at peak load.
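Battery-life figures like these depend heavily on assumptions, so here is a rough energy-based estimate as a sketch. It assumes:

- two alkaline AA cells of about 2500 mAh at 1.5 V each,
- a boost converter of 85% efficiency to reach the 5 V the board needs.

Both values are assumptions. Alkaline cells also deliver noticeably less capacity at currents above an amp, so the peak figures are optimistic.

```python
def battery_life_hours(load_ma: float, load_volts: float = 5.0,
                       cell_mah: float = 2500, cell_volts: float = 1.5,
                       cells: int = 2, converter_efficiency: float = 0.85) -> float:
    """Runtime estimated from energy (watt-hours) rather than mAh alone,
    because two AA cells (~3 V) must be boosted to the board's 5 V."""
    battery_wh = cell_mah / 1000 * cell_volts * cells
    load_w = load_ma / 1000 * load_volts
    return battery_wh * converter_efficiency / load_w


# Raspberry Pi 4 current figures quoted in the text.
for label, load_ma in [("idle, low", 410), ("idle, high", 600),
                       ("peak, low", 860), ("peak, high", 1430)]:
    print(f"{label:>10}: {battery_life_hours(load_ma):.1f} h")
```

Under these assumptions the board runs for roughly 2 to 3 hours at idle and only about 1 to 1.5 hours at peak load.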

For a commercial solution similar to the Raspberry Pi, Nvidia's Jetson could also be a good option. Google’s Coral accelerator module (Edge TPU) can perform 4 TOPS (tera-operations per second) at 8-bit, at 2 TOPS/Watt[17], but it requires a PCIe or USB 2.0 interface. So although it is relatively low power, it still needs a higher-end, Linux-type embedded host with large amounts of memory to interface to it.

Another approach for small ANNs is to use a low-power processor such as the commonly available ARM Cortex-M4. These can achieve ~1µA sleep current (10+ years on AA batteries) and very efficient µA/MHz power usage. However, they are usually fairly limited in flash and RAM. Typical parts offer up to around 512KB of RAM and 1MB of internal flash, which limits the size of the ANN that can be run. The ANN can, however, be stored in an external SPI flash. This approach may work for smaller ANNs, or if a low operating speed is acceptable. The Arm Cortex-M CMSIS-NN software library can also provide up to a 5x boost compared with not using it [11]. The basic approach with these MCUs is to wake the processor and run the ANN only when required. Another example of a Cortex-M4 framework is ST Micro’s ‘STM32Cube.AI’[18]. It helps simplify the process of getting your low-power embedded device running a Neural Network, and it supports a number of different processors in the STM32 lineup.
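The wake-up-and-run approach can be checked with a simple duty-cycle calculation. This sketch uses hypothetical figures:

- 1 µA sleep current,
- 10 mA while the ANN runs for 200 ms,
- one inference per minute,
- a 2500 mAh battery, with self-discharge ignored.

```python
HOURS_PER_YEAR = 24 * 365


def average_current_ua(sleep_ua: float, active_ma: float,
                       active_ms: float, period_s: float) -> float:
    """Average current for a device that wakes every `period_s` seconds,
    runs the ANN for `active_ms` milliseconds, then goes back to sleep."""
    active_fraction = (active_ms / 1000) / period_s
    return active_fraction * active_ma * 1000 + (1 - active_fraction) * sleep_ua


def battery_life_years(capacity_mah: float, average_ua: float) -> float:
    """Ideal battery life, ignoring self-discharge and temperature effects."""
    return capacity_mah * 1000 / average_ua / HOURS_PER_YEAR


# HYPOTHETICAL figures: 1 uA asleep, 10 mA for a 200 ms inference once a minute.
avg = average_current_ua(sleep_ua=1, active_ma=10, active_ms=200, period_s=60)
print(f"average current: {avg:.1f} uA")
print(f"battery life:    {battery_life_years(2500, avg):.1f} years")
```

In this example the average current is about 34 µA and the battery lasts roughly 8 years. The short bursts of inference dominate the power budget, not the 1 µA sleep current. How often the ANN runs therefore matters as much as which chip is chosen.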

Another low-power approach is to use one of the new dedicated CNN (Convolutional Neural Net) SoCs now coming out, such as the Chinese Kendryte K210 chip [6]. This SoC is a “low end” MCU for ANNs, but it packs an impressive punch of processing power. It is quoted at 1 TOPS with an 8-bit quantized network, at around 0.3W. Using the on-board memory, it can run a small quantized CNN of up to ~5-6MB at QVGA@60fps or VGA@30fps. If you have a larger CNN, you can instead store it in external flash and run it from there, though at a slower rate. It is a very affordable option compared with most SoCs, and much lower power. The processor has two 64-bit RISC-V CPUs, each with an independent Floating Point Unit for additional processing. Typical power consumption is < 1W and 200-300mA[9].
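The quoted figures can be turned into a rough efficiency comparison. The TOPS and watt values come from the text: 4 TOPS at 2 TOPS/W for the Coral module, and 1 TOPS at about 0.3 W for the K210. The model cost of 1 GOP (billion operations) per inference and the 30% utilisation are hypothetical assumptions, since real chips rarely sustain their peak rating.

```python
def joules_per_op(tops: float, watts: float) -> float:
    """Energy per operation at the chip's peak rating."""
    return watts / (tops * 1e12)


def max_inferences_per_second(tops: float, gops_per_inference: float,
                              utilisation: float) -> float:
    """Upper bound on throughput for a model costing `gops_per_inference`."""
    return tops * 1e12 * utilisation / (gops_per_inference * 1e9)


# Peak 8-bit figures quoted in the text: (TOPS, watts).
chips = {"Coral Edge TPU": (4.0, 4.0 / 2.0), "Kendryte K210": (1.0, 0.3)}

# HYPOTHETICAL model cost and utilisation, for illustration only.
GOPS_PER_INFERENCE = 1.0
UTILISATION = 0.3

for name, (tops, watts) in chips.items():
    rate = max_inferences_per_second(tops, GOPS_PER_INFERENCE, UTILISATION)
    print(f"{name}: {joules_per_op(tops, watts) * 1e12:.2f} pJ/op, "
          f"<= {rate:,.0f} inferences/s")
```

On these peak figures the K210 uses about 0.3 pJ per operation against 0.5 pJ for the Coral module. The Coral module, however, offers four times the raw throughput.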

Another way to accelerate CNNs on the Edge is to use FPGAs and ASICs [5] [13]. FPGAs typically require the network to be quantized, which reduces accuracy by roughly 3%. Most research with FPGAs has used only small CNNs. FPGA implementations can give a 15% speedup compared with an MCU solution alone[7]. FPGAs can be complex beasts, and their power supply design and power requirements are not insignificant.

There are a large number of modules available for doing AI on the Edge. Some can be found in a GitHub repository that collects Edge AI devices[10], and vision-based solutions are listed at edge-ai-vision.com[4]. More and more processors now include CNN processing accelerators.

Figure 4: List of Embedded Vision AI ICs.[4]

Conclusion

Operating Artificial Neural Networks on the Edge is now possible, even with fairly low-power MCUs. This unlocks exciting opportunities for AI-based applications, such as image analysis and classification, that were previously not possible with non-AI algorithms.

However, there is still a wide range of factors and trade-offs to consider when developing an ‘AI on the Edge’ type product. Power consumption, processing power limitations and overall cost are three important ones.

Do you have a product idea that might benefit from Embedded Artificial Intelligence? Contact or call Beta Solutions to see how we can help you!

References:

- [1] https://en.wikipedia.org/wiki/History_of_artificial_neural_networks

- [2] Isaacson, Walter. The Innovators: How a Group of Hackers, Geniuses, and Geeks Created the Digital Revolution (p. 203). Simon & Schuster. Kindle Edition.

- [3] By Glosser.ca - Own work, Derivative of File: Artificial neural network.svg, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=24913461

- [4] https://www.edge-ai-vision.com/resources/industrymap/

- [5] https://www.hackster.io/news/the-big-benchmarking-roundup-a561fbfe8719

- [6] https://canaan.io/product/kendryteai

- [7] TinyCNN: A Tiny Modular CNN Accelerator for Embedded FPGA

- [8] William Dally. High-Performance Hardware for Machine Learning. Tutorial, NIPS, 2015

- [9] K210 Datasheet

- [10] https://github.com/crespum/edge-ai

- [11] https://cloud.google.com/vision

- [12] https://azure.microsoft.com/en-us/services/cognitive-services/computer-vision/

- [13] https://aws.amazon.com/rekognition/?p=tile&blog-cards.sort-by=item.additionalFields.createdDate&blog-cards.sort-order=desc

- [14] https://www.tensorflow.org/

- [15] https://caffe.berkeleyvision.org/

- [16] https://www.tensorflow.org/learn

- [17] https://coral.ai/products/accelerator-module/

- [18] https://www.st.com/content/st_com/en/stm32-ann.html

- [19] CNN Architectures: LeNet, AlexNet, VGG, GoogLeNet, ResNet and more…

- [20] https://www.youtube.com/watch?v=K_BHmztRTpA

- [21] https://www.analyticsvidhya.com/blog/2020/02/cnn-vs-rnn-vs-mlp-analyzing-3-types-of-neural-networks-in-deep-learning/

- [22] https://www.quantmetry.com/neural-networks-embedded-systems/